Overview

Replication is a Wave Analytics feature that decouples data extraction from your dataflows. It lets you extract data on a separate schedule, so the dataflows have less to do and run faster. Once you enable the replication option, additional actions become available for the replication.

Features of Data Replication

- Create multiple dataflows.

- Schedule the replication to run the dataflows.

- Automatically update the replications.

- Remove fields.

- Filter rows.

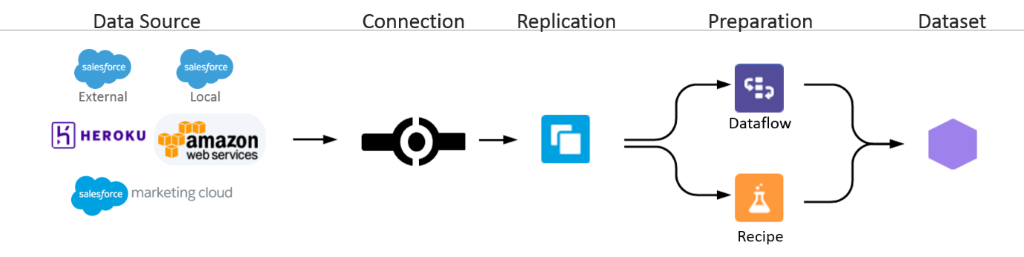

What is Data Replication?

A replication is a stored set of extracted data, or a set of steps that you want the dataflow or replication to perform on an existing source. From the Data Manager tab, we can view and edit replication settings, create dataflows, and more.

Fig: Data Replication Model

Enable Replication

Setup → enter “Wave Analytics” in the Quick Find box → Settings → Enable Replication → Save.

Once we enable replication, we can view the Salesforce objects, set up a replication schedule, and run the replication.

Create a Dataflow (Replication)

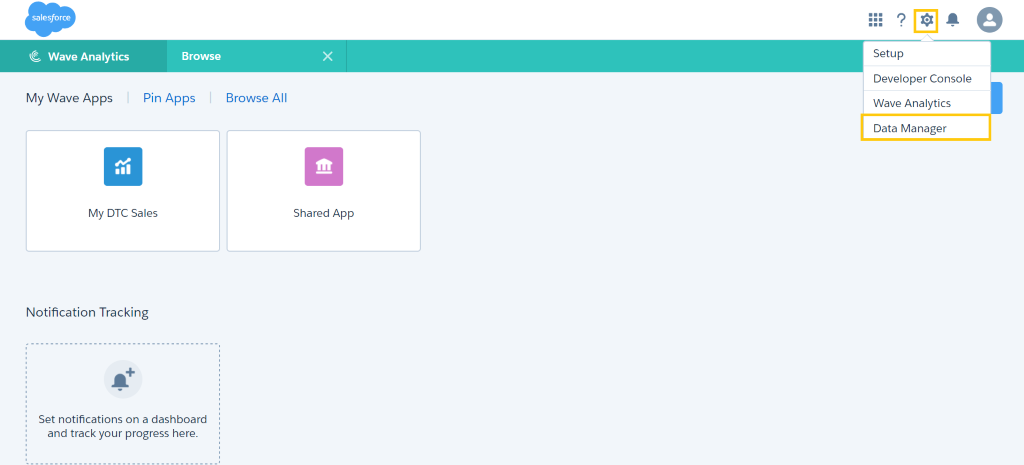

Step 1: Go to the Force.com App Menu → Wave Analytics → click the Settings icon → choose Data Manager.

Fig: Select Data Manager window

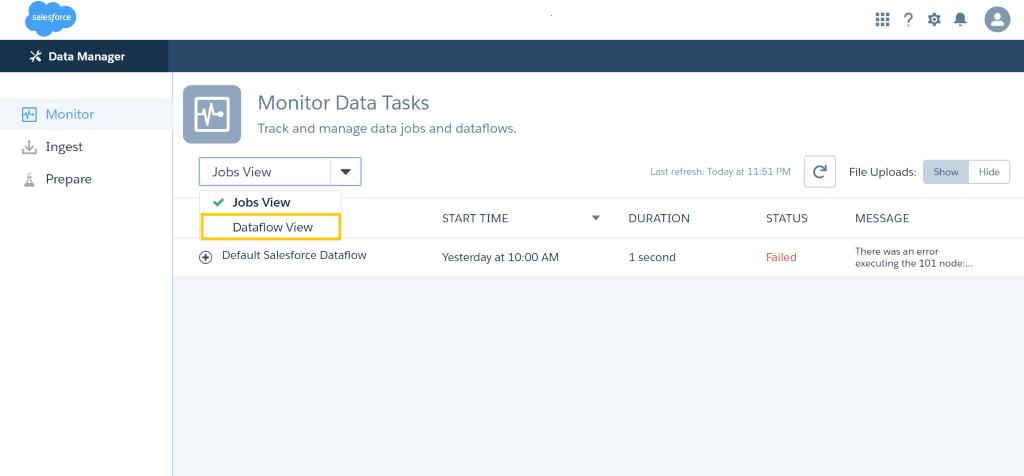

Step 2: Click the Dataflow tab, then click Create Dataflow.

- Here, we create a new dataflow from the Data Manager window by clicking the drop-down list and selecting the “Dataflow View” option.

- Transformations are added directly to the dataflow definition file.

- This file is in JSON format and contains the transformations that represent the dataflow logic.

- The dataflow definition file is saved with UTF-8 encoding.
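To make the shape of such a file concrete, here is a minimal Python sketch that builds a small dataflow definition and writes it as UTF-8 encoded JSON. The node names, the object and field names, and the `sfdcDigest` / `sfdcRegister` action names are assumptions for illustration and are not taken from this article, so check them against your own org before relying on them. The helpers `save_definition` and `load_definition` are hypothetical.

```python
import json

# Illustrative definition: one node extracts an object, one registers a dataset.
# Node names, object/field names, and action names are assumptions.
dataflow = {
    "Extract_Opportunity": {
        "action": "sfdcDigest",
        "parameters": {
            "object": "Opportunity",
            "fields": [{"name": "Id"}, {"name": "Name"}, {"name": "Amount"}],
        },
    },
    "Register_Opportunity": {
        "action": "sfdcRegister",
        "parameters": {
            "alias": "Opportunities",
            "name": "Opportunities",
            "source": "Extract_Opportunity",
        },
    },
}


def save_definition(definition, path):
    """Write the definition as JSON using UTF-8 encoding."""
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(definition, handle, indent=2, ensure_ascii=False)


def load_definition(path):
    """Read a UTF-8 encoded JSON definition back into a dict."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)
```

Each top-level key is one transformation node, and a node refers to the node it reads from by name, which is how the file represents the dataflow logic.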

Fig: Dataflow View

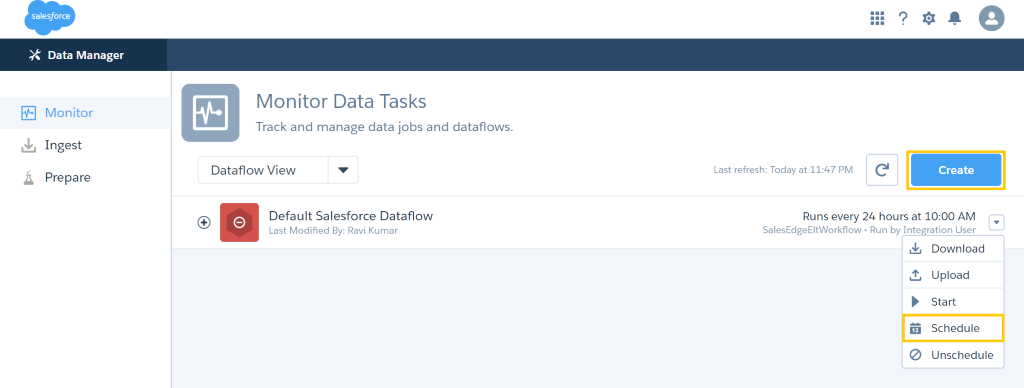

Step 3: Click Schedule to set up a schedule for the dataflow.

- After we run a dataflow job for the first time, it runs on a daily schedule by default.

- We can schedule the dataflow to run at hourly, weekly, or monthly intervals.

- We can also unschedule the dataflow.

Fig: Schedule Dataflow

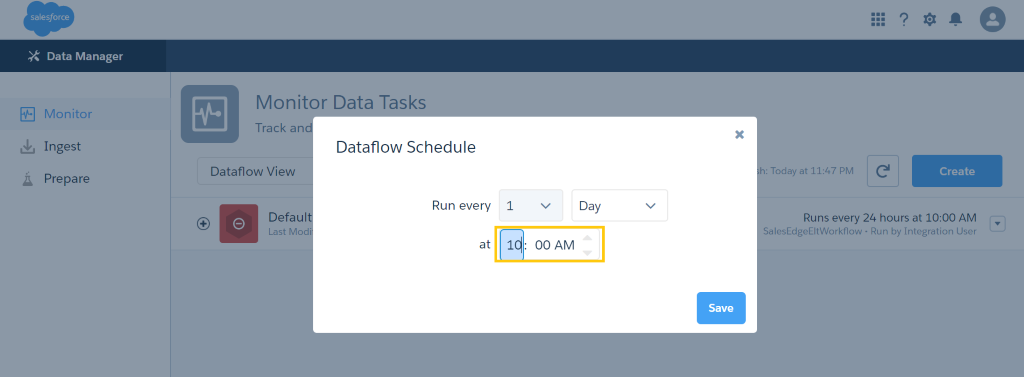

Step 4: Enter the day and hours in the Schedule Dataflow window, then click Save.
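To see what a day-and-hour schedule implies, the sketch below computes the next run time for a weekly schedule. It only illustrates the arithmetic and is not how Wave Analytics schedules jobs internally. The function name `next_weekly_run` and its parameters are hypothetical.

```python
from datetime import datetime, timedelta


def next_weekly_run(now, weekday, hour):
    """Return the next run strictly after `now` on `weekday` (0 = Monday) at `hour`:00."""
    # Start from today's date at the scheduled hour.
    candidate = now.replace(hour=hour, minute=0, second=0, microsecond=0)
    # Move forward to the requested day of the week (0 to 6 days ahead).
    candidate += timedelta(days=(weekday - now.weekday()) % 7)
    # If that moment has already passed, the next run is one week later.
    if candidate <= now:
        candidate += timedelta(days=7)
    return candidate
```

For example, calling `next_weekly_run(datetime(2024, 1, 1, 10, 0), 2, 6)` from a Monday morning gives the following Wednesday at 06:00.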

Fig: Schedule Dataflow (Day and Hours)

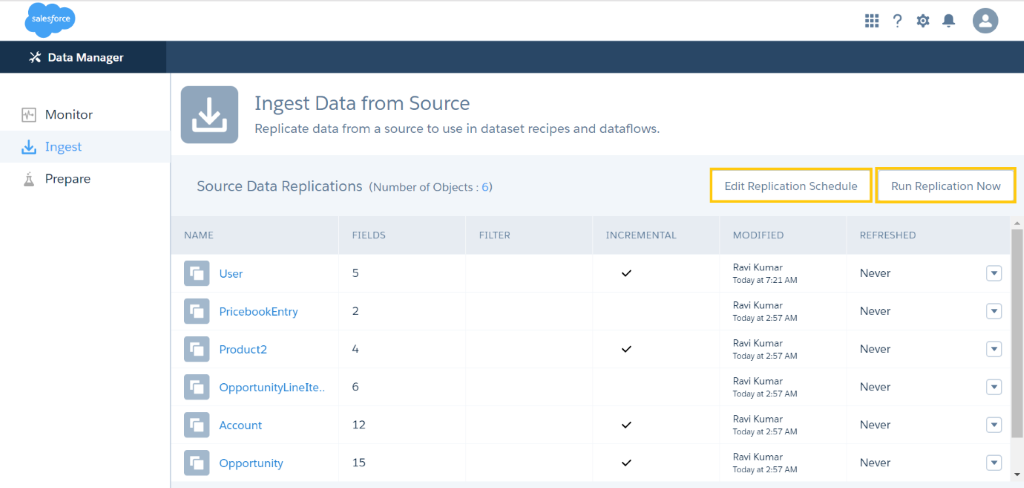

Step 5: Edit and run the replication in the source data replication window.

We can edit an existing replication and run it at a specific time.

Fig: Edit and Run the Replication

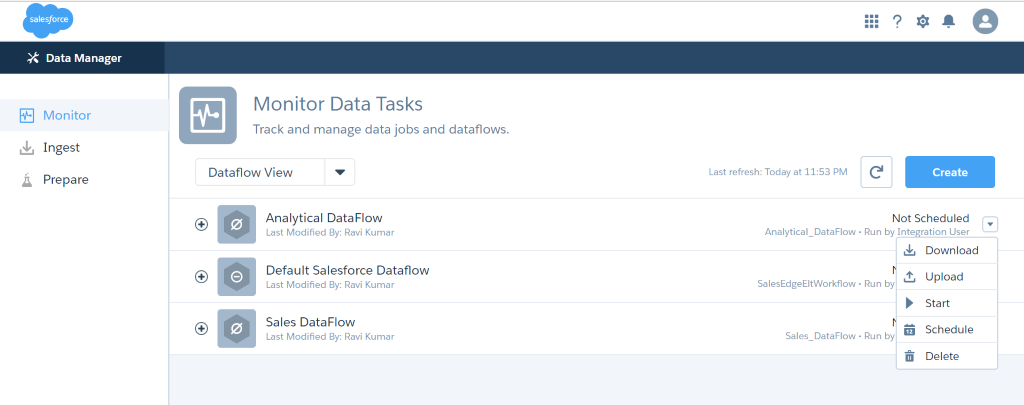

Step 6: Create more than one dataflow replication at a time.

- We can create multiple dataflows for different purposes and run them on their own schedules.

- We can break large dataflows into smaller ones to build datasets faster.

- We can delete unwanted dataflows, or disable them to temporarily stop them from running.
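One way to picture breaking a large dataflow into smaller ones is to group the nodes of a definition that depend on each other. The sketch below is a rough illustration only: it assumes each node names its upstream node in a `source` parameter and ignores any other kind of link between nodes. The function `split_dataflow` is hypothetical and not part of Wave Analytics.

```python
def split_dataflow(definition):
    """Group nodes into independent smaller dataflows by following 'source' links."""
    # Undirected links between each node and the node it reads from.
    neighbours = {name: set() for name in definition}
    for name, node in definition.items():
        source = node.get("parameters", {}).get("source")
        if source in definition:
            neighbours[name].add(source)
            neighbours[source].add(name)

    seen, groups = set(), []
    for name in definition:
        if name in seen:
            continue
        # Depth-first walk collects one connected group of nodes.
        stack, group = [name], set()
        while stack:
            current = stack.pop()
            if current in group:
                continue
            group.add(current)
            stack.extend(neighbours[current] - group)
        seen |= group
        # Keep the original node order inside each smaller dataflow.
        groups.append({n: definition[n] for n in definition if n in group})
    return groups
```

Each returned dict could then be saved as its own dataflow definition and given its own schedule.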

Fig: Multiple Dataflow Replications

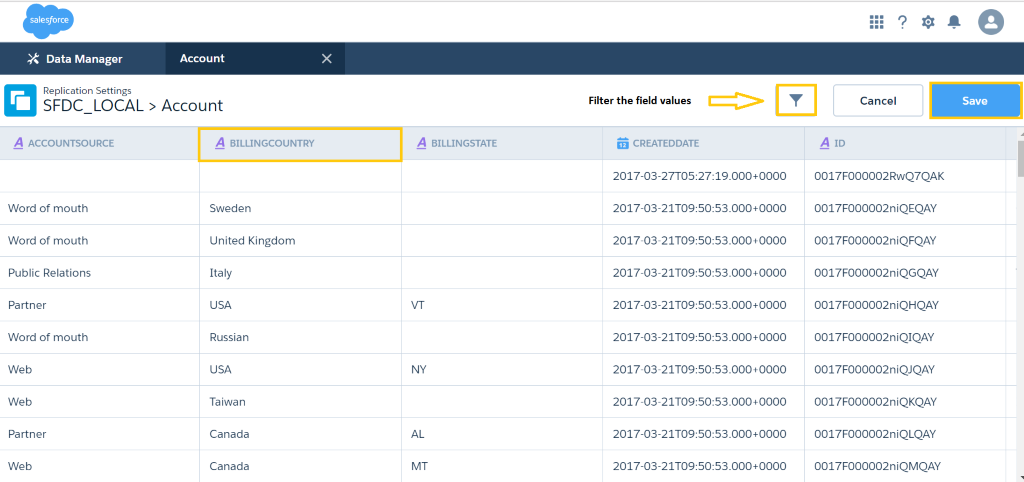

Step 7: Add filters in the Replication Settings window.

- We can create replication filters to filter on field values and narrow down the results.

- We can disable replication for a field here too. Hover over the field column and click the cross icon.

- Here, we can also add and remove fields.
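The effect of these settings on the extracted data can be sketched in plain Python: rows that do not match the filter are dropped, and only the selected fields are kept. This models the idea only and is not the Wave Analytics implementation. The function `apply_replication_settings` and the sample field names in the usage note are hypothetical.

```python
def apply_replication_settings(rows, keep_fields, filters):
    """Keep rows matching every (field, value) filter, then keep only `keep_fields`."""
    kept = []
    for row in rows:
        # A row survives only if all filter conditions hold.
        if all(row.get(field) == value for field, value in filters.items()):
            # Drop any field that is not selected for replication.
            kept.append({f: row[f] for f in keep_fields if f in row})
    return kept
```

For instance, passing `filters={"StageName": "Closed Won"}` and `keep_fields=["Id", "Amount"]` would keep only the closed-won rows and strip every other field from them.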

Fig: Adding filters

Pros:

- Increased reliability and availability.

- Reading from replicated copies of data is typically faster.

- Less communication overhead.

Cons:

- More storage space is needed.

- Update operations are costly.

- Maintaining data integrity is complex.

Summary

This article illustrates how to optimize your dataflows and schedule dataflow replication in Wave Analytics. Replication also makes it easy to create multiple dataflows.
